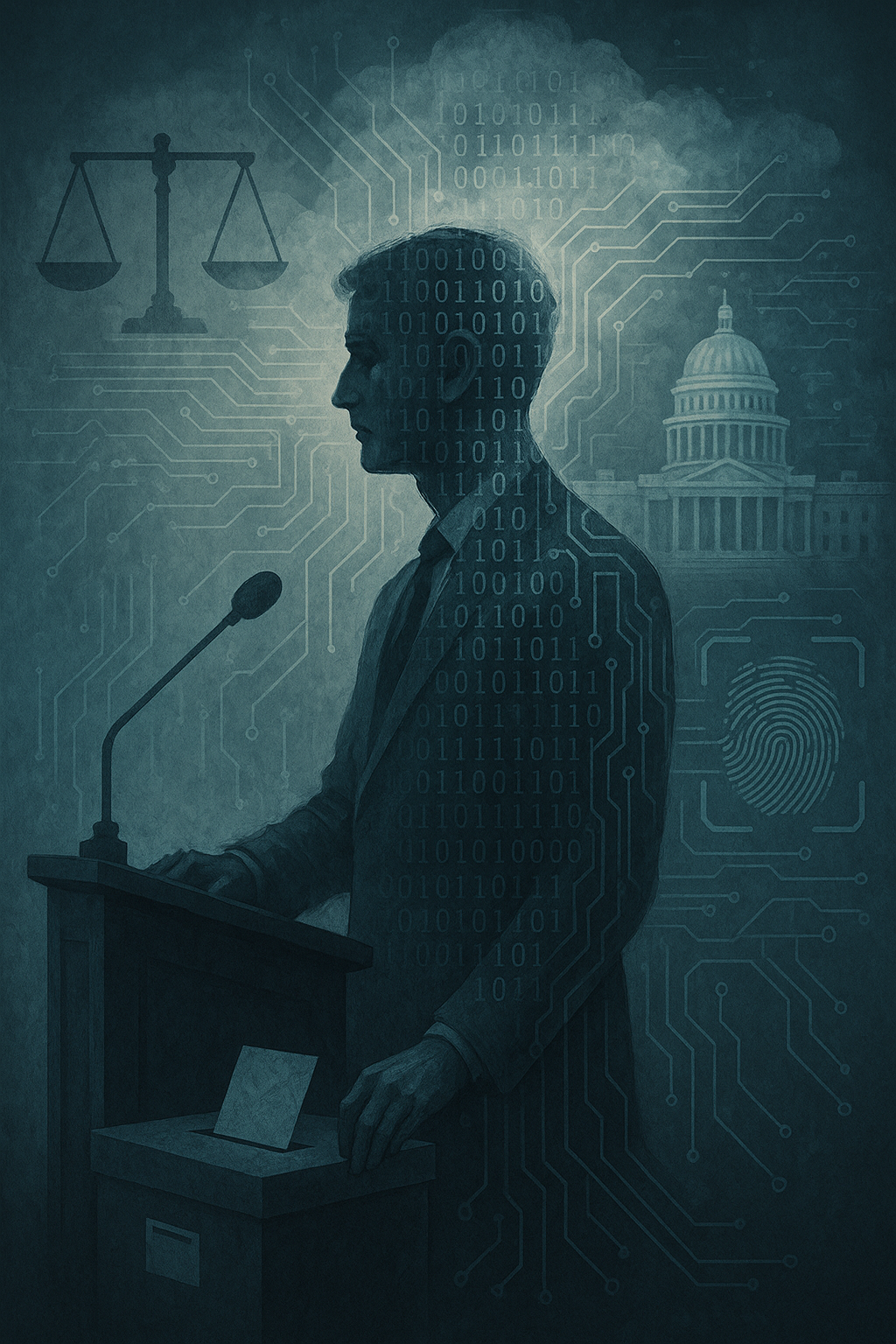

“The machines are learning—fast. But are our democracies keeping up?”

Democracy today stands at the crossroads of history and innovation. While technology once empowered democratic participation—bringing voices closer to the ballot, information to the citizen, and transparency to the corridors of power—it is now threatening to erode the very pillars it aimed to strengthen. The ascent of algorithmic governance, propelled by artificial intelligence (AI), poses a question that strikes at the core of democratic ethics: What happens when decisions affecting millions are no longer made by people, but by opaque algorithms?

As we automate everything from policing to welfare allocation, urban planning to electioneering, a silent revolution is unfolding. And with it comes a crisis of human agency, as accountability slips into code, and public reasoning is replaced by machine learning models. This article navigates the paradox of progress—how AI is both augmenting and undermining democracy—and explores the path forward in reclaiming the democratic spirit in an age of machines.

What is Algorithmic Governance?

Algorithmic governance refers to the use of computational algorithms—often driven by AI and big data analytics—to make or assist decisions traditionally handled by human institutions. In practice, this spans:

- Predictive policing and criminal risk scoring (e.g., COMPAS, a recidivism risk-assessment tool used in U.S. courts)

- AI-based recruitment for public-sector jobs

- Welfare fraud detection systems (as seen in the Dutch childcare benefits scandal, the “Toeslagenaffaire”)

- Algorithmic content moderation and political microtargeting on social media

- Smart cities deploying AI to manage traffic, energy, and surveillance

While these systems offer efficiency, speed, and cost reduction, they also introduce a range of ethical and democratic challenges—from biased outcomes and lack of transparency to weakening civic participation and due process.

The Crisis of Human Agency

Democracy is predicated on deliberation, consent, and accountability. But algorithmic governance subtly displaces these foundational principles:

1. Opacity and the Death of Reasoning

Most algorithms—especially those driven by deep learning—operate as black boxes. Citizens are often unaware of how decisions are made, unable to contest them, and excluded from the processes that affect their lives. This challenges the right to explanation, a key tenet in democratic accountability.

For example, if an AI system denies a loan or flags an individual for welfare fraud, the affected person has little recourse. What input data was used? Was it biased? Who audits the algorithm? In most cases, the answers are unclear, sometimes even to the engineers who designed the system.

2. Bias, Discrimination, and Algorithmic Injustice

Algorithms are trained on historical data, and that data reflects societal biases. AI systems have been found to discriminate based on race, gender, caste, or socioeconomic status. In India, the increasing push for automated, data-driven governance (e.g., Aadhaar-linked delivery of services) has sometimes exacerbated exclusion due to technical failures or flawed data matching.

This reinforces structural inequality under the guise of neutrality, and codifies discrimination into system logic—making it even harder to detect and dismantle.

3. Erosion of Democratic Deliberation

AI-driven platforms like Facebook, YouTube, and X (formerly Twitter) have become central arenas of political discourse. However, their recommendation algorithms optimize for engagement, not truth. This creates echo chambers, misinformation spirals, and polarization, eroding the democratic fabric.

Cambridge Analytica’s use of psychographic targeting to influence voter behavior, whatever its disputed real-world effect, showed how algorithmic nudging can be aimed at bypassing informed choice, treating citizens as programmable data points.

4. Technocratic Capture and Unelected Power

AI systems are often designed and managed by private tech corporations whose interests may not align with democratic ideals. As states increasingly rely on such systems, power shifts from elected representatives to data scientists and engineers, creating a shadow layer of technocratic governance.

This raises a fundamental question: Who governs the governors of algorithms?

India’s Tryst with Algorithmic Governance

India, with its ambitious push toward Digital India, smart cities, and tech-driven welfare delivery, provides a fertile ground to study the democratic consequences of algorithmic governance.

- The Aadhaar ecosystem, while streamlining services, has led to concerns around exclusion, biometric errors, and privacy violations.

- The use of facial recognition technologies by police without clear legal safeguards has stirred debates about surveillance and civil liberties.

- Recent efforts to integrate AI in judicial processes—like the Supreme Court’s initiative to deploy AI for legal research—hold promise but also risk reducing nuanced interpretation to pattern recognition.

In each case, citizen consent, participation, and awareness remain worryingly low.

Towards Ethical and Democratic AI

AI in governance is not inherently undemocratic. The problem lies in how it is designed, deployed, and monitored. Democracies must now urgently build frameworks that ensure human agency is preserved, not replaced.

1. Transparent Algorithms and Explainability

Governments must ensure that all public-sector algorithms are open to audit, review, and explanation. Citizens have a right to understand decisions affecting them. Explainable AI (XAI) models must become standard.
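To make the idea of an explainable decision concrete, here is a minimal Python sketch of a system that returns its reasoning along with its verdict. It assumes a simple linear scoring rule, which is only one of many possible designs. The feature names, weights, and threshold are hypothetical, chosen purely for illustration and not drawn from any real public-sector system.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical weights for an illustrative eligibility score.
# These values are assumptions, not taken from any real system.
WEIGHTS: Dict[str, float] = {
    "income_ratio": -2.0,
    "dependents": 0.5,
    "months_unemployed": 0.25,
}
BASELINE = 1.0   # assumed starting score
THRESHOLD = 0.0  # assumed cut-off: approve when score >= THRESHOLD


@dataclass
class Explanation:
    approved: bool
    score: float
    contributions: Dict[str, float]  # how much each input moved the score


def decide_with_explanation(applicant: Dict[str, float]) -> Explanation:
    """Score an applicant and record each feature's contribution."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    return Explanation(score >= THRESHOLD, score, contributions)


def explain(applicant: Dict[str, float]) -> str:
    """Render the decision as text, largest influences first."""
    result = decide_with_explanation(applicant)
    ranked = sorted(
        result.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
    )
    verdict = "approved" if result.approved else "denied"
    lines = [f"Decision: {verdict} (score {result.score:+.2f})"]
    lines += [f"  {name}: {value:+.2f}" for name, value in ranked]
    return "\n".join(lines)


if __name__ == "__main__":
    print(explain({"income_ratio": 2.0, "dependents": 2, "months_unemployed": 4}))
```

Because every contribution is recorded, an affected person or an auditor can see which inputs drove the outcome and contest a specific one. Deep learning models do not decompose this cleanly, which is part of why the choice of model is itself a question of accountability.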

2. Algorithmic Impact Assessments

Much like environmental impact assessments, democratic states must conduct social and ethical audits of AI systems before deployment. This includes evaluating for bias, inclusivity, and potential harms.
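One small part of such an assessment, the check for biased outcomes, can be sketched in a few lines of Python. The example below compares approval rates across groups and flags the system when the lowest rate falls below 80% of the highest, a heuristic borrowed from the "four-fifths rule" in U.S. employment guidelines. The function names, the threshold default, and the sample data are assumptions made for illustration. A real audit would use several fairness metrics, not this one alone.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of favourable outcomes for each group.

    `decisions` is a sequence of (group_label, was_approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no disparity can be measured
    return min(rates.values()) / highest


def audit(decisions: Iterable[Tuple[str, bool]], threshold: float = 0.8) -> dict:
    """Flag the system if the ratio falls below the assumed threshold."""
    rates = selection_rates(list(decisions))
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


if __name__ == "__main__":
    # Made-up sample: group A approved 8 of 10 times, group B 4 of 10.
    sample = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 4 + [("B", False)] * 6
    )
    print(audit(sample))
```

A flagged result does not prove discrimination, and an unflagged one does not rule it out. The value of the check is that it turns a vague worry about bias into a number that can be published, questioned, and tracked before and after deployment.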

3. Democratic Participation in Tech Design

Civic participation should extend into the design of AI systems. Just as urban plans invite public feedback, so should algorithms that impact education, health, or law enforcement. Participatory design models are the need of the hour.

4. Digital Rights and AI Literacy

A digitally empowered citizenry is essential. Schools, universities, and civil society must invest in AI literacy—not just technical, but ethical and legal. People should know when they are interacting with an algorithm, and how to challenge it.

5. Robust Legal Frameworks

India’s Digital Personal Data Protection Act (2023) is a step forward, but it lacks robust provisions on algorithmic accountability and transparency. A dedicated AI Regulation Bill, balancing innovation with rights, is imperative.

Reclaiming the Democratic Spirit

To navigate democracy in the age of AI is to reassert the primacy of human agency over machine logic. It is to ask not just what algorithms can do, but what they should do—and who gets to decide.

We need a paradigm shift from governing by algorithm to governing the algorithm, from data extraction to data dignity, from surveillance to sovereignty. As citizens, scholars, and policymakers, we must ensure that technology remains a tool of democracy, not its master.

For in this age of AI, the fate of democracy may well hinge not on grand elections or constitutional amendments, but on the silent lines of code we choose—or refuse—to write.

Conclusion

The future of governance is undeniably digital. But democracy is more than data; it is dialogue. As we move deeper into algorithmic ecosystems, we must bring with us the values that make democracy humane: empathy, accountability, transparency, and participation. The code of democracy must not be reduced to binary logic. It must remain an open source of collective aspiration and shared destiny.